Building ETL Pipelines with Python

José Rafael Gutierrez

1 year ago

Introduction

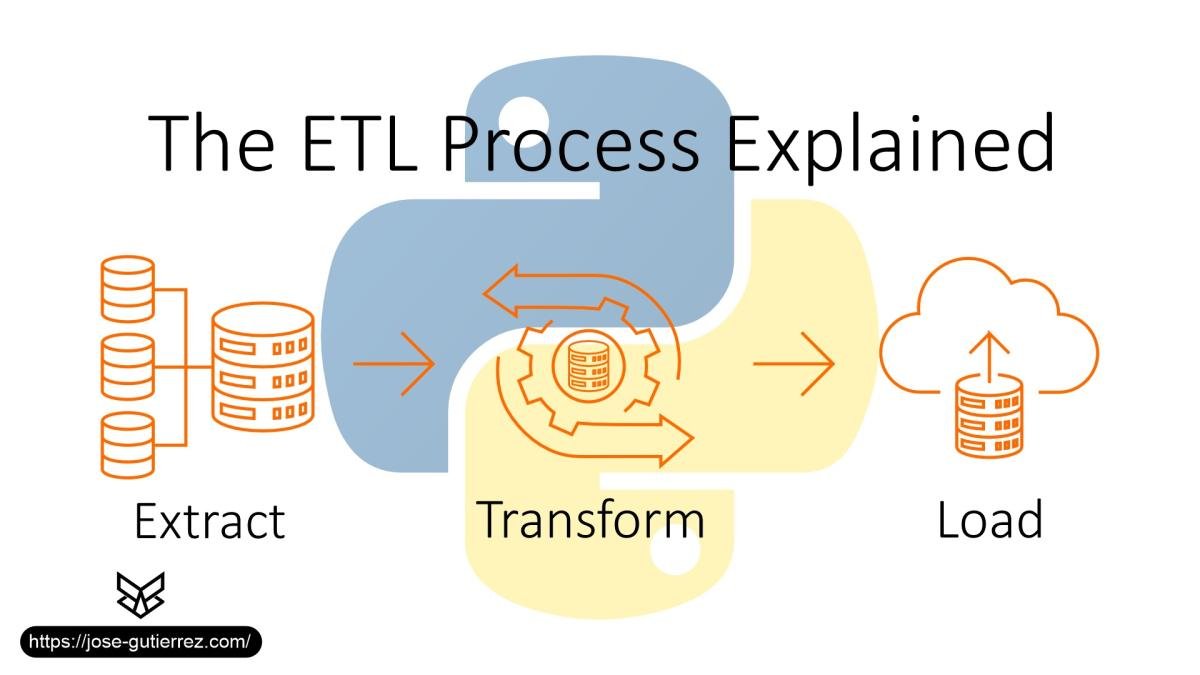

In data engineering, ETL (Extract, Transform, Load) pipelines are essential for gathering data from multiple sources, processing it, and loading it into a target database. An effective ETL pipeline cleans, structures, and transforms raw data into a consistent format that analysts can query for insights. In this article, we’ll demonstrate how to build an ETL pipeline using Python and libraries like pandas, SQLAlchemy, and Airflow, applying best practices and clean code principles to keep the code readable, efficient, and scalable.

We’ll walk through each step of the ETL process with a practical example: extracting data from a file, transforming it with pandas, and loading it into a SQL database.

Sections

- Understanding ETL Pipelines and Their Importance

- Setting Up the Environment

- Extracting Data from Sources

- Transforming Data Using Pandas

- Loading Data into a Database

- Automating the ETL Process with Airflow

- Best Practices and Clean Code Principles for ETL

1. Understanding ETL Pipelines and Their Importance

An ETL pipeline is a workflow that processes data in three stages:

- Extract: Data is gathered from various sources, such as databases, APIs, or files (e.g., CSV or JSON).

- Transform: Raw data is cleaned, normalized, and transformed to fit the target database’s structure and needs.

- Load: Transformed data is loaded into a target database or data warehouse for further analysis or reporting.

ETL pipelines are essential for businesses to efficiently manage data from multiple systems, ensuring consistency, availability, and easy access to data for analysis teams.
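To make the three stages concrete before bringing in pandas and SQLAlchemy, here is a minimal sketch that uses only Python’s standard library: `csv` for extraction, a plain function for transformation, and an in-memory SQLite database (`sqlite3`) as the load target. The sample rows, the `products` table, and the function names `extract`, `transform`, and `load` are illustrative assumptions, not the pipeline built later in this article.

```python
import csv
import io
import sqlite3

# Hypothetical sample input standing in for a CSV file
SAMPLE_CSV = """product_name,price
Laptop,999.99
Mouse,
Keyboard,-5
Monitor,189.50
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: skip rows with a missing or negative price, lowercase names."""
    cleaned = []
    for row in rows:
        if not row["price"]:
            continue  # missing value
        price = float(row["price"])
        if price < 0:
            continue  # invalid value
        cleaned.append((row["product_name"].lower(), price))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a products table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (product_name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()

# Each stage feeds the next: extract -> transform -> load
conn = sqlite3.connect(":memory:")
load(transform(extract(SAMPLE_CSV)), conn)
print(conn.execute("SELECT product_name, price FROM products").fetchall())
```

Keeping each stage as a separate function means any one of them can be swapped out (a different source, a different database) without touching the others, which is the same structure the pandas-based steps below follow.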

2. Setting Up the Environment

To follow this article, make sure you have Python installed along with the necessary libraries. You will need:

- pandas: For data transformation and manipulation.

- SQLAlchemy: For connecting to and interacting with SQL databases.

- Airflow: For automating and scheduling the ETL pipeline.

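Install these dependencies via pip. The MySQL example in section 5 connects through the `mysql+mysqlconnector` driver, so it also needs the `mysql-connector-python` package. Airflow’s documentation recommends installing it with a constraints file so that its many dependencies resolve to compatible versions.

```bash
pip install pandas sqlalchemy apache-airflow mysql-connector-python
```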
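After installing, one quick way to confirm which versions ended up in your environment is to query package metadata with the standard library’s `importlib.metadata` (Python 3.8+). This is a convenience sketch: the helper name `installed_versions` is hypothetical, and the package list mirrors the pip command above (PyPI distribution names, not import names).

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as passed to pip install
REQUIRED = ["pandas", "sqlalchemy", "apache-airflow"]

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result

for name, ver in installed_versions(REQUIRED).items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```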

3. Extracting Data from Sources

Example Scenario: Extracting Data from a CSV File

In this example, we’ll extract data from a CSV file containing product information. Assume the data is stored in a file named products.csv with at least a product_name column and a price column, which the transformation step relies on.

Code to Extract Data

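```python
import pandas as pd

# Extract data from a CSV file into a DataFrame
def extract_data(file_path):
    try:
        data = pd.read_csv(file_path)
        print("Data extraction successful!")
        return data
    except (FileNotFoundError, pd.errors.EmptyDataError, pd.errors.ParserError) as e:
        # Report the problem, then re-raise so later steps never receive None
        print(f"Error extracting data: {e}")
        raise

# Example usage
file_path = 'products.csv'
raw_data = extract_data(file_path)
```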

Explanation:

- Extract Function: We define the `extract_data` function to read data from the specified file path.

- Error Handling: As a clean code practice, we use error handling to capture issues like missing files or incorrect formats.

This function extracts raw data, ready for the next step in the pipeline: transformation.
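Because the transformation step expects `product_name` and `price` columns, it can help to fail fast at extraction time when a file does not match. The sketch below assumes that two-column schema; `REQUIRED_COLUMNS` and `extract_with_schema_check` are hypothetical names, and `io.StringIO` stands in for a real file because `pd.read_csv` accepts any file-like object as well as a path.

```python
import io

import pandas as pd

# Hypothetical schema expected by the transformation step
REQUIRED_COLUMNS = {"product_name", "price"}

def extract_with_schema_check(source):
    """Read a CSV (path or file-like object) and verify the expected columns exist."""
    data = pd.read_csv(source)
    missing = REQUIRED_COLUMNS - set(data.columns)
    if missing:
        raise ValueError(f"Missing required columns: {sorted(missing)}")
    return data

sample = io.StringIO("product_name,price\nLaptop,999.99\nMouse,19.5\n")
df = extract_with_schema_check(sample)
print(df.shape)  # (2, 2)
```

Raising here gives a clear error message at the source instead of a `KeyError` somewhere inside the transformation.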

4. Transforming Data Using Pandas

Cleaning and Normalizing Data

In this transformation step, we’ll clean and normalize the data. Let’s assume our raw data contains fields with missing values or inconsistent formats, which we’ll clean using pandas.

Transformation Code

```python
import pandas as pd

def transform_data(data):
    # Work on a copy so the caller's raw DataFrame is left unchanged
    data = data.copy()

    # Convert price to numeric; values that cannot be parsed become NaN
    data['price'] = pd.to_numeric(data['price'], errors='coerce')

    # Normalize text columns to lowercase (missing names stay missing)
    data['product_name'] = data['product_name'].str.lower()

    # Drop rows with null values, including prices that failed to parse
    data = data.dropna()

    # Ensure no negative prices
    data = data[data['price'] >= 0]

    print("Data transformation successful!")
    return data

# Transform raw data
cleaned_data = transform_data(raw_data)
```

Explanation:

- Null Value Handling: We drop rows with missing values.

- Text Normalization: Converting text fields to lowercase for consistency.

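- Data Type Conversion: Converting the price column to a numeric type for further calculations; with errors='coerce', values that cannot be parsed become NaN instead of raising an error.

- Data Validation: Removing rows with negative prices to ensure data integrity; rows whose price could not be parsed are discarded as well, so only valid, non-negative prices reach the database.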
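The filters above silently drop bad rows. If you would rather stop the pipeline when the cleaned data still violates expectations, a small validation function can run after `transform_data` and before loading. This is a sketch: the rules (no nulls, numeric and non-negative prices) restate the checks described above, and the name `validate_products` is hypothetical.

```python
import pandas as pd

def validate_products(data):
    """Return a list of rule violations; an empty list means the data passed."""
    problems = []
    if data[["product_name", "price"]].isna().any().any():
        problems.append("null values present")
    if not pd.api.types.is_numeric_dtype(data["price"]):
        problems.append("price is not numeric")
    elif (data["price"] < 0).any():
        problems.append("negative prices present")
    return problems

clean = pd.DataFrame({"product_name": ["laptop"], "price": [999.99]})
dirty = pd.DataFrame({"product_name": ["mouse", None], "price": [-1.0, 5.0]})
print(validate_products(clean))  # []
print(validate_products(dirty))  # ['null values present', 'negative prices present']
```

In a pipeline, a non-empty result would typically be turned into an exception (for example `raise ValueError(problems)`) so that nothing is loaded.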

Best Practice: Organizing transformations into individual, readable steps makes the code more maintainable and easier to debug.
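One way to apply this practice in pandas is `DataFrame.pipe`, which chains small functions that each take and return a DataFrame. The step functions below (`coerce_prices`, `lowercase_names`, `drop_invalid_rows`) are hypothetical and mirror the cleaning rules above; using `assign` returns new DataFrames, so the raw input is never modified.

```python
import pandas as pd

# Each transformation is a small, named function: DataFrame in, DataFrame out
def coerce_prices(df):
    return df.assign(price=pd.to_numeric(df["price"], errors="coerce"))

def lowercase_names(df):
    return df.assign(product_name=df["product_name"].str.lower())

def drop_invalid_rows(df):
    df = df.dropna()
    return df[df["price"] >= 0]

raw = pd.DataFrame({
    "product_name": ["Laptop", "Mouse", "Cable", None],
    "price": ["999.99", "abc", "-3", "10"],
})

# DataFrame.pipe applies the steps in order, reading top to bottom
cleaned = raw.pipe(coerce_prices).pipe(lowercase_names).pipe(drop_invalid_rows)
print(cleaned)
```

Each step can now be unit-tested on its own, and adding a rule means adding one function and one `.pipe` call.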

5. Loading Data into a Database

Setting Up a Database Connection with SQLAlchemy

Once the data is cleaned, the next step is to load it into a database. In this example, we’ll use pandas together with a SQLAlchemy engine to load the data into a MySQL database.

Database Connection Code

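```python
from sqlalchemy import create_engine
from sqlalchemy.exc import SQLAlchemyError

# Connect to the MySQL database (replace the placeholder credentials)
def get_db_connection():
    try:
        engine = create_engine("mysql+mysqlconnector://username:password@localhost/db_name")
        # create_engine() is lazy, so open a connection to confirm the database is reachable
        with engine.connect():
            pass
        print("Database connection successful!")
        return engine
    except (SQLAlchemyError, ImportError) as e:
        # ImportError covers a missing mysql-connector-python driver
        print(f"Database connection error: {e}")
        return None

# Insert data into MySQL, replacing the products table if it already exists
def load_data(data, engine):
    try:
        data.to_sql('products', con=engine, if_exists='replace', index=False)
        print("Data loaded successfully!")
    except SQLAlchemyError as e:
        print(f"Error loading data: {e}")
        raise

# Example usage
engine = get_db_connection()
if engine is not None:
    load_data(cleaned_data, engine)
```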

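Explanation:

- Database Connection: The `get_db_connection` function creates a SQLAlchemy engine for the MySQL database.

- Data Loading: The `load_data` function uses pandas’ `to_sql` to write the data into the `products` table. With `if_exists='replace'`, pandas drops and recreates the table if it already exists, so indexes and column types defined on the existing table are lost; `if_exists='append'` keeps the table and adds rows instead.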

Security Note: In production, read credentials from environment variables or a secrets manager instead of hard-coding them in the connection string.
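A minimal sketch of the environment-variable approach, assuming variables named `DB_USER`, `DB_PASSWORD`, `DB_HOST`, and `DB_NAME` (these names and the helper `build_mysql_url` are hypothetical). The password is URL-encoded with `urllib.parse.quote_plus`, because characters such as `@` or `/` would otherwise break the URL; SQLAlchemy’s `URL.create()` is an alternative that handles this escaping itself.

```python
import os
from urllib.parse import quote_plus

def build_mysql_url():
    """Build a SQLAlchemy MySQL URL from environment variables (names are assumptions)."""
    user = quote_plus(os.environ["DB_USER"])
    # URL-encode the password so special characters do not break the URL
    password = quote_plus(os.environ["DB_PASSWORD"])
    host = os.environ.get("DB_HOST", "localhost")
    name = os.environ["DB_NAME"]
    return f"mysql+mysqlconnector://{user}:{password}@{host}/{name}"

# Usage: engine = create_engine(build_mysql_url())
```

Using `os.environ[...]` for required values means a missing variable fails immediately with a `KeyError` naming it, rather than producing a half-empty connection string.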

6. Automating the ETL Process with Airflow

With Apache Airflow, we can automate the ETL pipeline, ensuring it runs on a schedule or in response to specific events. Airflow allows us to define workflows as directed acyclic graphs (DAGs).
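To see what the "acyclic graph" part means in practice, the standard library’s `graphlib` module (Python 3.9+) can order tasks from a dependency mapping. This only illustrates the concept using the three task names from the DAG below; it is not how Airflow’s scheduler is implemented.

```python
from graphlib import CycleError, TopologicalSorter

# Map each task to the set of tasks it depends on
etl_graph = {
    "transform_data": {"extract_data"},
    "load_data": {"transform_data"},
}
print(list(TopologicalSorter(etl_graph).static_order()))
# ['extract_data', 'transform_data', 'load_data']

# Making extraction depend on loading creates a cycle, so no valid run order exists
cyclic = {**etl_graph, "extract_data": {"load_data"}}
try:
    list(TopologicalSorter(cyclic).static_order())
except CycleError as err:
    print("Not a DAG:", err.args[0])
```

Because the graph has no cycles, there is always at least one order in which every task runs after all of its dependencies, which is exactly what a scheduler needs.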

Example of an Airflow DAG for ETL

This example demonstrates a basic DAG in Airflow that executes the extract, transform, and load steps.

Code for Airflow DAG

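```python
from datetime import datetime

import pandas as pd
from airflow import DAG
# Airflow 2.x import path; airflow.operators.python_operator is deprecated
from airflow.operators.python import PythonOperator

# extract_data, transform_data, get_db_connection and load_data are the functions
# defined above; in a real DAG file, import them from your own module.

# Tasks may run in separate processes, so they exchange data through files rather than
# module-level variables evaluated when the DAG file is parsed. These staging paths are
# placeholders and must be readable by every worker (a local path only works when all
# tasks run on the same machine).
RAW_PATH = '/tmp/products_raw.csv'
CLEAN_PATH = '/tmp/products_clean.csv'

def run_extract():
    extract_data('products.csv').to_csv(RAW_PATH, index=False)

def run_transform():
    transform_data(pd.read_csv(RAW_PATH)).to_csv(CLEAN_PATH, index=False)

def run_load():
    # Create the engine inside the task, not when the DAG file is parsed
    engine = get_db_connection()
    if engine is None:
        raise RuntimeError("Could not connect to the database")
    load_data(pd.read_csv(CLEAN_PATH), engine)

# Define DAG (`schedule` replaces the deprecated `schedule_interval` since Airflow 2.4)
with DAG(
    'etl_pipeline',
    description='ETL pipeline using Python and Airflow',
    schedule='@daily',
    start_date=datetime(2023, 1, 1),
    catchup=False,
) as dag:
    # Airflow tasks
    extract_task = PythonOperator(task_id='extract_data', python_callable=run_extract)
    transform_task = PythonOperator(task_id='transform_data', python_callable=run_transform)
    load_task = PythonOperator(task_id='load_data', python_callable=run_load)

    # Task dependencies
    extract_task >> transform_task >> load_task
```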

Explanation:

- DAG Definition: The DAG object defines the ETL pipeline and its schedule (`@daily`).

- Tasks: We define each step of the ETL pipeline (`extract_data`, `transform_data`, `load_data`) as a PythonOperator.

- Dependencies: Each task depends on the previous one, creating a linear flow from extraction to loading.

Running the DAG

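Place the DAG file in Airflow’s DAGs folder (by default `$AIRFLOW_HOME/dags`), start Airflow, and trigger `etl_pipeline` from the Airflow UI to see the automated ETL pipeline in action. For local experiments, `airflow standalone` starts the scheduler and web server together, and `airflow dags test etl_pipeline 2023-01-01` executes a single DAG run without the scheduler.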
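When tasks exchange data through intermediate files instead of in-memory objects, giving each run its own path keeps a rerun or backfill of one date from overwriting another date’s files. In Airflow 2.x, a PythonOperator callable that declares a `ds` parameter receives the run’s logical date as a `YYYY-MM-DD` string, which makes a convenient path component. The helper `staging_path` and the `/data/staging` base directory are hypothetical.

```python
from pathlib import PurePosixPath

def staging_path(base_dir, ds, stage):
    """Return a per-run staging file path: <base_dir>/<ds>/<stage>.csv."""
    return PurePosixPath(base_dir) / ds / f"{stage}.csv"

# Hypothetical usage inside a task callable:
#   def run_extract(ds):
#       path = staging_path("/data/staging", ds, "raw")
#       # create the directory, then write the extracted DataFrame to path
print(staging_path("/data/staging", "2023-01-01", "raw"))
```

The function only computes the path; creating directories and writing files stays in the task, which keeps the helper trivial to test.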

7. Best Practices and Clean Code Principles for ETL

7.1 Clean Code Principles

- Modularization: Separate each step (extract, transform, load) into distinct functions to make code reusable and easier to maintain.

- Error Handling: Implement error handling in each function to capture and log any issues, improving reliability.

- Documentation: Give each function a docstring that states its inputs, outputs, and side effects, and use comments to explain non-obvious steps.
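The error-handling and documentation points above can be combined in a small decorator that gives every step consistent logging. This is one possible sketch, not a prescribed pattern: `log_step` and the example `transform` step are hypothetical names, and it logs through Python’s standard `logging` module, whose output Airflow typically captures in each task’s log.

```python
import functools
import logging
import time

logger = logging.getLogger("etl")

def log_step(func):
    """Log the start, duration, and any failure of an ETL step, then re-raise errors."""
    @functools.wraps(func)  # keep the step's name and docstring
    def wrapper(*args, **kwargs):
        logger.info("Starting %s", func.__name__)
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            logger.exception("Step %s failed", func.__name__)
            raise
        logger.info("Finished %s in %.2fs", func.__name__, time.perf_counter() - start)
        return result
    return wrapper

@log_step
def transform(rows):
    """Keep only rows with a non-negative price (hypothetical example step)."""
    return [row for row in rows if row["price"] >= 0]

logging.basicConfig(level=logging.INFO)
print(transform([{"price": 5}, {"price": -1}]))  # [{'price': 5}]
```

Re-raising after logging matters: the failure is recorded, but the pipeline (or Airflow task) still stops instead of continuing with missing data.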

7.2 Best Practices for ETL Pipelines

- Data Validation: Always validate the data in the transformation step to avoid inconsistencies in the final database.

- Secure Credentials: Use environment variables for database credentials to enhance security.

- Monitor ETL Jobs: Regularly monitor ETL pipeline performance using tools like Airflow’s logging and Grafana to identify bottlenecks.

- Optimize Data Loading: Use batch processing if dealing with large datasets to improve database load times.
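One way to batch the load step with pandas is the `chunksize` argument of `DataFrame.to_sql`, which writes rows in groups of the given size rather than all at once. The sketch below uses an in-memory SQLite database (pandas accepts a `sqlite3` connection directly) and a hypothetical 2,500-row table so it runs without a MySQL server; with a SQLAlchemy engine the call is the same, and `method='multi'` can additionally pack several rows into each INSERT statement. Whether a given setting speeds up a particular database is worth measuring rather than assuming.

```python
import sqlite3

import pandas as pd

# Hypothetical dataset large enough to be written in several batches
products = pd.DataFrame({
    "product_name": [f"product {i}" for i in range(2500)],
    "price": [float(i) for i in range(2500)],
})

conn = sqlite3.connect(":memory:")
# chunksize makes pandas insert at most 1,000 rows per batch
products.to_sql("products", conn, if_exists="replace", index=False, chunksize=1000)

count = pd.read_sql_query("SELECT COUNT(*) AS n FROM products", conn)["n"].iloc[0]
print(count)  # 2500
```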

Following these principles creates a clean, efficient, and scalable ETL pipeline that is easier to debug and maintain over time.

Conclusion

Building an ETL pipeline in Python requires careful planning, modularization, and adherence to clean code principles to keep it efficient and scalable. In this guide, we used pandas to extract and transform data, SQLAlchemy to load it into a database, and Airflow to schedule and automate the workflow. This modular approach helps data engineers keep data quality consistent and reduces operational overhead.

With these building blocks, you can build ETL pipelines that grow with business needs while preserving data integrity, so decision-makers receive reliable data for analysis.